
At a time when trust can be forged by an algorithm, seeing and hearing are no longer believing. Generative AI now makes it possible to fabricate realistic images, voices, and videos in seconds. What once required a Hollywood studio can be done on a laptop - and the results are nearly impossible to distinguish from the real thing.

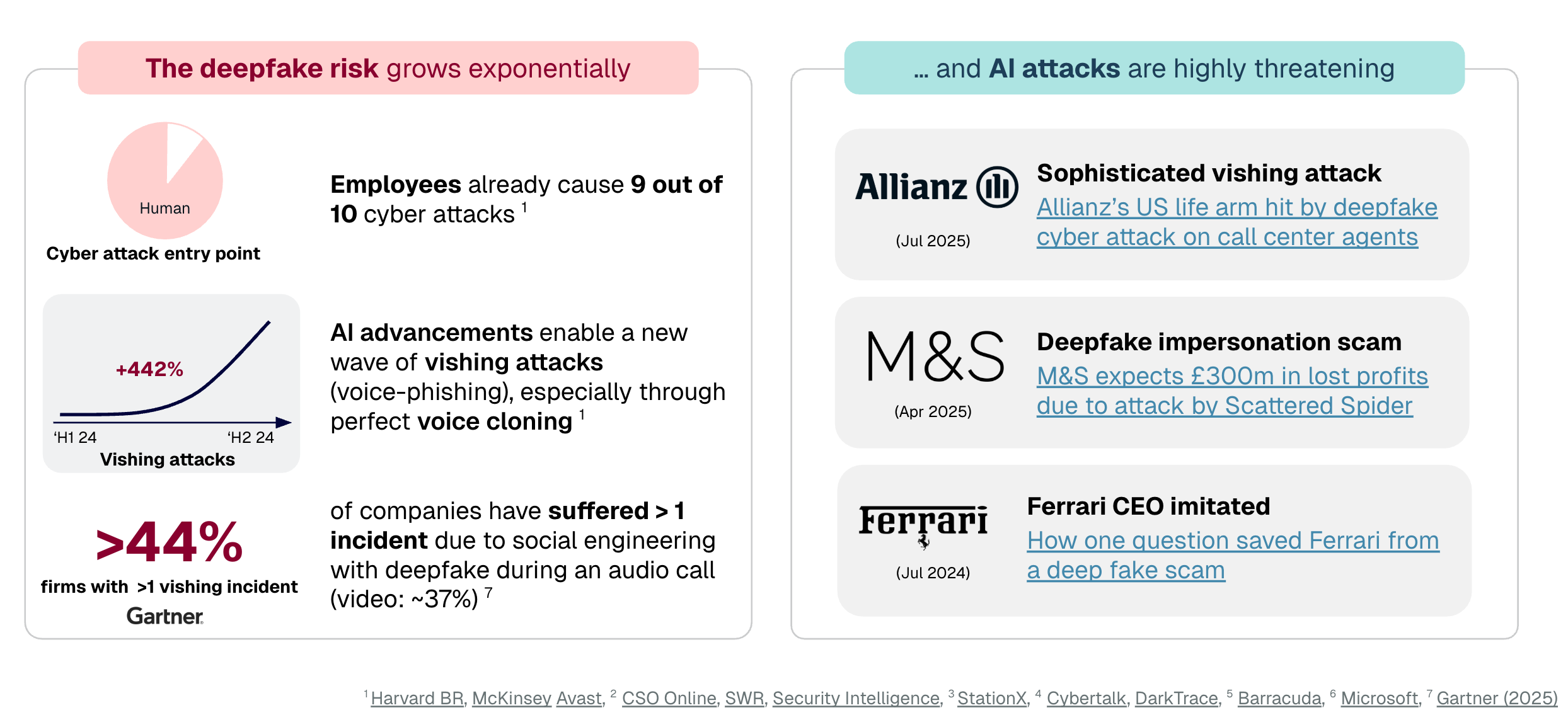

Tools like ChatGPT, ElevenLabs, and deepfake generators have turned synthetic media into an everyday technology. That accessibility is reshaping cybersecurity. According to Microsoft’s Digital Defense Report 2024, nine out of ten cyber incidents still come down to human behavior. Gartner (2025) adds that 44% of organizations have already faced voice phishing (vishing) attempts. The next wave of social engineering is no longer text-based - it sounds and looks like someone you know.

In early 2024, British engineering firm Arup became one of the first publicly known victims of a deepfake attack. An employee joined what appeared to be a routine video call with the company’s CFO. Only later did investigators realize that every other participant on the screen - including the CFO - had been AI-generated. The attackers walked away with $25 million.

“Like many other businesses around the globe, our operations are subject to regular attacks, including invoice fraud, phishing scams, WhatsApp voice spoofing, and deepfakes. We have seen that the number and sophistication of these attacks has been rising sharply.”

— Rob Greig, CIO at Arup

In mid-2025, the hacker group ShinyHunters (UNC6040) used AI-cloned voices to impersonate Salesforce support staff in a large-scale vishing campaign, tricking employees into granting system access and exposing sensitive customer data. Attackers no longer need to hack your system; they just need to sound like someone you trust.

While enterprises are targeted with increasingly sophisticated fraud, individuals face emotional manipulation at a personal level. Criminals now clone the voices of loved ones or colleagues to create panic and urgency - prompting victims to act before thinking. Five simple habits help you check who is really on the line:

1. Set a family “safeword”: a private code for real emergencies.

2. Ask a question only they’d know: something personal and verifiable.

3. Switch communication channels: hang up and confirm via another trusted method.

4. Pause for 20 seconds: urgency and fear are tools of manipulation.

5. Never share credentials: legitimate organizations will never ask for PINs or passwords.

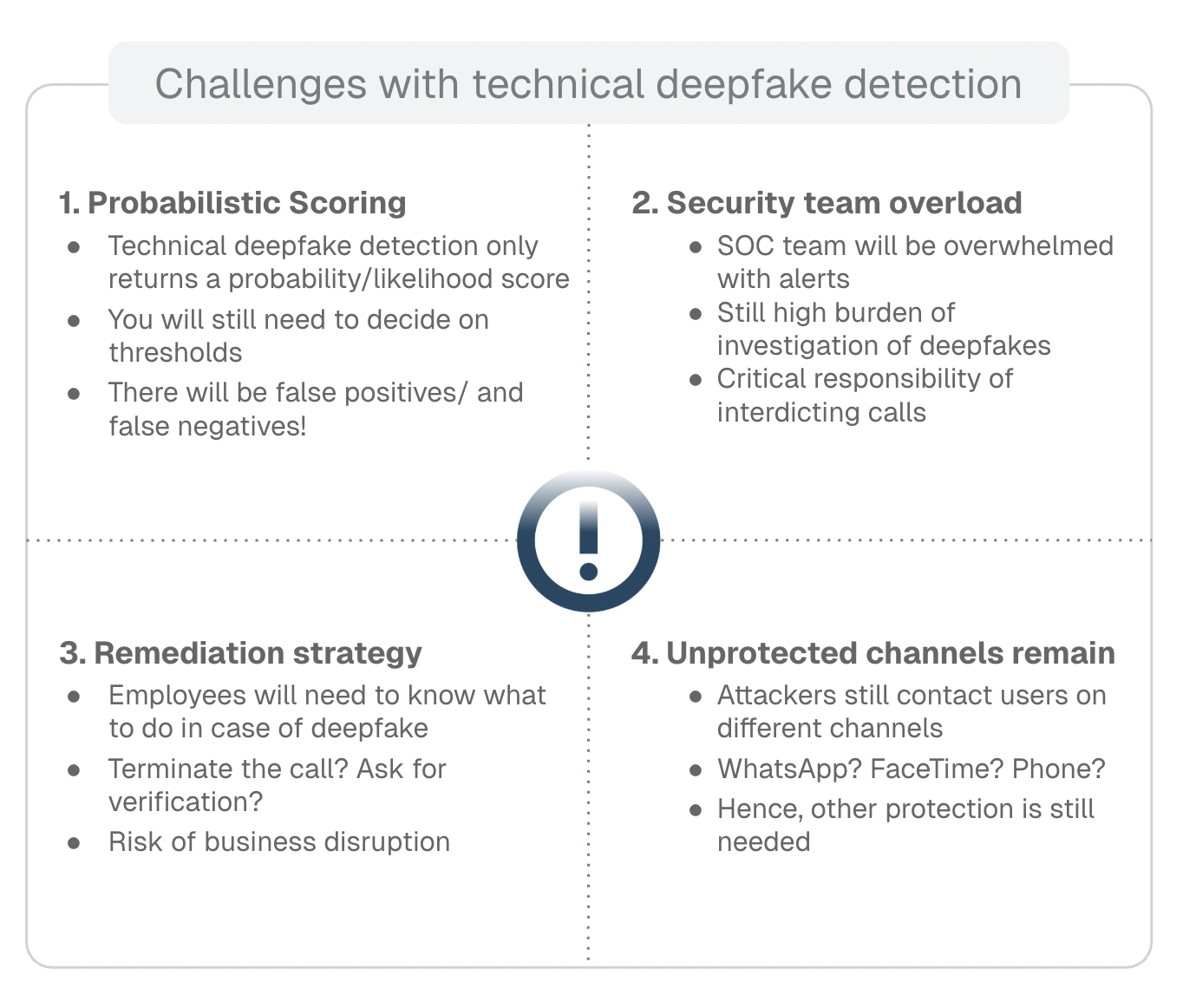

AI-based deepfake detection tools analyze speech patterns, pixel data, and metadata to flag manipulated content, but they’re always one step behind. Every new generative model forces defenders to retrain detection systems, creating a constant arms race.

Even worse, detection tools deal in probabilities. Is a voice 90% fake? 99%? That decision can mean the difference between a false alarm and a major breach. And outside official platforms like Microsoft Teams or Zoom, most communication - WhatsApp, mobile calls, Telegram - remains completely unmonitored. That’s where attackers thrive.
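To make the threshold problem concrete, here is a minimal Python sketch. The scores and labels are invented for illustration and do not come from any real detection tool; `SAMPLES` and `evaluate` are hypothetical names. It only shows how moving the cut-off from 90% to 99% trades false alarms for missed fakes.

```python
# Hypothetical detector outputs: (score that the clip is fake, whether it really is fake).
# These numbers are made up for illustration, not measurements from any real tool.
SAMPLES = [
    (0.97, True),
    (0.93, True),
    (0.995, True),
    (0.92, True),
    (0.91, False),
    (0.96, False),
    (0.40, False),
    (0.10, False),
]


def evaluate(threshold, samples=SAMPLES):
    """Count false alarms and missed fakes when flagging every score >= threshold."""
    false_alarms = sum(
        1 for score, is_fake in samples if score >= threshold and not is_fake
    )
    missed_fakes = sum(
        1 for score, is_fake in samples if score < threshold and is_fake
    )
    return false_alarms, missed_fakes


for threshold in (0.90, 0.99):
    false_alarms, missed_fakes = evaluate(threshold)
    print(
        f"threshold {threshold:.2f}: "
        f"{false_alarms} false alarms, {missed_fakes} missed fakes"
    )
```

With this toy data, the 90% cut-off flags two genuine clips as fake, while the 99% cut-off lets three real deepfakes through. Neither setting is free of cost, which is why a detection score alone cannot settle the question.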

Technology can flag suspicious content, but only people can stop manipulation in action. Awareness and readiness remain the decisive factors.

Modern awareness training is about realism. Immersive simulations prepare employees for real-world attacks before they happen. At revel8, this means creating AI-driven attack scenarios that mimic the tone, timing, and context of genuine threats - from deepfake CEO calls to urgent “support” requests.

By combining OSINT-based risk profiling, adaptive learning, and behavioral insights, revel8 helps organizations build what technology alone can’t: human instinct.

Deepfake attacks work because they look and sound real. AI-generated voices and videos closely recreate the cadence, tone, and appearance of trusted people, lowering skepticism and bypassing rational checks.

Some detection tools can catch deepfakes, but attackers evolve faster than detection models. The most reliable strategy combines technology with continuous human awareness.

Employees learn best through interactive simulations that replicate real-world attack behavior, not theoretical slides or boring videos. When employees experience the pressure and emotional cues firsthand, they learn to respond instinctively.

Detection technology is improving fast, and it’s a critical layer of defense. But deepfakes are evolving just as quickly, which means detection tools can’t operate in isolation. Each new generation of generative models lowers detection accuracy until algorithms are retrained. The strongest protection combines technical detection with human awareness, so employees know how to respond even when AI filters miss a threat.

If you suspect a deepfake, end the call or interaction immediately, verify through another trusted channel, document the incident, and inform your IT or security team.

AI will continue to blur the line between real and fake. The defining factor of strong cybersecurity isn’t just better technology - it’s better-prepared people. The strongest companies are those where every employee understands that trust itself can be hacked.

Stay alert. Train smart. Detect deepfakes before they detect you.