
We are entering an era where audio and video are no longer reliable proof of identity. Just as we learned over the past decades that photos can easily be manipulated, we are now discovering the same about voices and video.

Thirty years ago, you might have believed in the existence of the Yeti if someone showed you a clear photo. Today, everyone knows that pictures can be faked. But when it comes to voices and phone calls, most people still assume: “If I hear it, it must be real.”

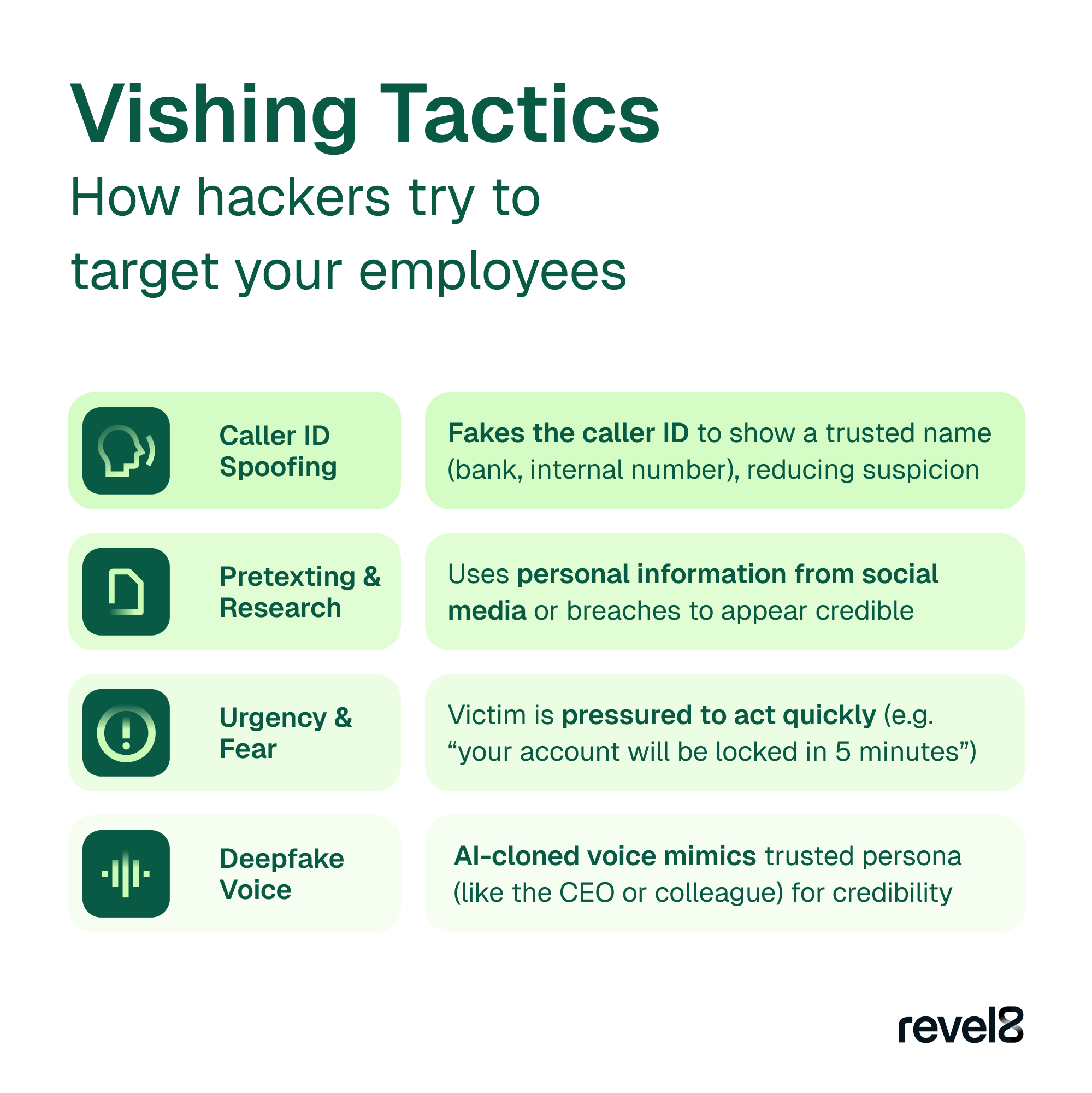

Cybercriminals exploit exactly this remaining naivety, and they do so with increasingly convincing AI-generated deepfake voices.

Vishing (short for voice phishing) refers to fraudulent phone calls or voice messages designed to trick victims into revealing sensitive information such as passwords, PINs, or payment details.

Unlike phishing emails, these attacks rely on human interaction and emotional manipulation to bypass technical defenses. Modern vishing campaigns use AI voice cloning, capable of replicating a person’s voice from just a few seconds of recorded audio. In 2025, the line between a genuine and a synthetic voice is thinner than ever.

The real danger lies in how vishing undermines trust. When we can no longer rely on what we hear, traditional methods of identity verification collapse.

Create a family safeword or simple phrase known only to your close circle. Use it to verify identity in urgent situations, or ask control questions that only the real person could answer. Unlike details an attacker can find online, a private safeword cannot be researched in advance. If the caller cannot provide the safeword, end the call immediately.

If something feels off, end the call and reconnect via a trusted channel – for example, by calling the person back using an official or verified number.

Deepfake callers often create time pressure, use emotional manipulation, or request confidential data such as passwords, TANs, or PINs. A legitimate organization should never ask for such credentials over the phone, so any request for them is a red flag.

The first 20 to 30 seconds of a call are critical, because emotional stress overrides rational thinking. Taking a deep breath and thinking before acting helps prevent impulsive decisions.

While individuals can rely on intuition, organizations need structured defenses combining technology, training, and process design.

AI-driven deepfake detection systems can flag suspicious audio or video in real time. However, they are not foolproof. They mainly recognize patterns from the AI models they were trained on, so audio from newer models may bypass detection. Detection results are probabilistic, not absolute, and false positives, along with the follow-up analysis each one requires, can quickly strain security teams.
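To see why false positives add up, a simple base-rate calculation helps. The sketch below assumes hypothetical, purely illustrative numbers (10,000 calls per day, one deepfake per 10,000 calls, a detector that catches 90% of deepfakes and wrongly flags 1% of genuine calls); these are not measurements of any real product.

```python
def alert_breakdown(calls_per_day: int, deepfake_rate: float,
                    true_positive_rate: float, false_positive_rate: float) -> dict:
    """Expected daily alerts from a probabilistic detector (simple base-rate model)."""
    deepfakes = calls_per_day * deepfake_rate
    genuine = calls_per_day - deepfakes
    true_alerts = deepfakes * true_positive_rate    # real deepfakes that get flagged
    false_alerts = genuine * false_positive_rate    # genuine calls wrongly flagged
    missed = deepfakes - true_alerts                # deepfakes that slip through
    total_alerts = true_alerts + false_alerts
    # Precision: share of alerts that are actually deepfakes.
    precision = true_alerts / total_alerts if total_alerts else 0.0
    return {
        "true_alerts": true_alerts,
        "false_alerts": false_alerts,
        "missed": missed,
        "precision": precision,
    }


# Hypothetical, illustrative inputs only.
result = alert_breakdown(10_000, 0.0001, 0.90, 0.01)
print(result)
```

With these assumed inputs, roughly 100 genuine calls are flagged for every ~0.9 real deepfake caught, so more than 99% of alerts are false alarms that someone still has to review.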

No critical business process should rely solely on voice or video identification. Introduce multi-factor verification, including callbacks, internal codes, or secondary communication channels.
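One way to make this rule concrete is to encode it as a policy check. The sketch below is hypothetical: the action names, check labels, and the threshold of two independent checks are assumptions for illustration, not a prescribed standard. Its key property is that a recognized voice or face on the incoming call never counts toward authorization.

```python
# Hypothetical action names; a real organization would define its own list.
CRITICAL_ACTIONS = frozenset({"wire_transfer", "change_bank_details", "password_reset"})

# Hypothetical checks that do NOT depend on the incoming call itself.
OUT_OF_BAND_CHECKS = frozenset({
    "callback_to_directory_number",    # we call back a number from a trusted directory
    "internal_code",                   # caller provides an agreed internal code
    "secondary_channel_confirmation",  # confirmation via a separate, authenticated channel
})


def may_proceed(action: str, completed_checks: set[str], min_checks: int = 2) -> bool:
    """Return True if a request may proceed under this sketch's policy."""
    if action not in CRITICAL_ACTIONS:
        return True
    # Anything outside OUT_OF_BAND_CHECKS (e.g. "recognized_voice") is ignored:
    # voice or video alone never authorizes a critical action.
    passed = set(completed_checks) & OUT_OF_BAND_CHECKS
    return len(passed) >= min_checks


# A convincing voice alone is not enough...
print(may_proceed("wire_transfer", {"recognized_voice"}))
# ...but a callback plus an internal code is.
print(may_proceed("wire_transfer", {"callback_to_directory_number", "internal_code"}))
```

Treating voice as zero evidence, rather than as one weak factor, keeps the rule simple to explain and hard to argue around under time pressure.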

Regular, realistic simulations are more effective than static e-learning. Employees should experience simulated vishing and deepfake scenarios to build instinctive responses. Having faced a similar situation in a safe environment helps them react correctly in a real attack.

Organizations should make mutual verification between employees easy and secure, for example by using an internal directory or secure verification app. Without such systems, enforcing cybersecurity policies can slow down productivity and collaboration.
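As an illustration of how a verification app might work, the sketch below uses time-based one-time passwords (TOTP, RFC 6238), a technique swapped in here as an assumption; the post does not say which mechanism such an app should use. It assumes each employee's secret was enrolled in a hypothetical internal verification service: the recipient asks the caller to read out the current code from their app, and the service checks it. The function names are hypothetical.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP using HMAC-SHA1 (the RFC's default algorithm)."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)                  # number of elapsed time steps
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                       # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % (10 ** digits)).zfill(digits)


def verify_caller_code(enrolled_secret: bytes, spoken_code: str,
                       now: Optional[float] = None, step: int = 30,
                       window: int = 1) -> bool:
    """Hypothetical check: accept the code for the current step or +/- `window` steps."""
    now = time.time() if now is None else now
    for drift in range(-window, window + 1):
        candidate = totp(enrolled_secret, now + drift * step, step)
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(candidate, spoken_code):
            return True
    return False


secret = b"12345678901234567890"  # RFC 6238 test secret, not for real use
print(totp(secret, 59, digits=8))
print(verify_caller_code(secret, "287082", now=59))
```

The small tolerance window accounts for clock drift and the few seconds it takes to read a code aloud; a real deployment would also need secure enrollment and rate limiting, which this sketch omits.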

We are at a turning point. Just as society learned not to trust photos blindly, we must now redefine what “authentic” means in the age of AI-generated voices. The ability to question, verify, and remain calm will become one of the most valuable digital skills for both individuals and organizations.

At revel8, we believe that awareness only works when practiced. Our AI-powered attack simulations prepare teams for real-world threats such as phishing, smishing, and deepfake voice scams. By combining OSINT-based risk profiling, gamified learning, and AI coaching, revel8 helps organizations build lasting resilience against modern social engineering attacks.